Data parsing is the process of extracting relevant information from unstructured data sources and transforming it into a structured format that can be easily analyzed. A data parser is a software program or tool used to automate this process.

Parsing is a crucial step in data processing, as it enables businesses to efficiently manage and analyze vast amounts of data. By utilizing their own parser, businesses can customize their data parsing process to meet their specific needs and extract the most valuable insights from their data.

Unstructured data, such as text files or social media posts, can be difficult to work with because it lacks organization. With a data parser, however, this data can be transformed into structured data, which is organized into a specific format that is easy to analyze.

In this blog post, we will explore data parsing and parsing technologies in more detail, examining the benefits of using a data parser and how it can help businesses and data analysts make informed decisions based on structured data.

Why Is Data Parsing Important?

Data parsing is the process of extracting useful information from data in a particular format, such as CSV, XML, JSON, or HTML. Our previous blog post introduced data parsing and discussed its importance in today's data-driven world. In this post, we'll dive deeper into data parsing, data parsers, and how to create your own data parser.

A data parser is a software tool that reads and analyzes data in a particular format, extracts specific information from it, and converts that information into a more usable form. Many data parsers are available, such as Beautiful Soup, lxml, and csvkit. These tools are handy for analyzing large amounts of data quickly and efficiently. For instance, if you're working with XML data, you may need to import XML into MySQL to ensure seamless database integration and efficient querying.

However, you may need to create your own data parser if you're dealing with interactive data, natural language processing, or a data format that has no existing parser. Creating your own parser can be a daunting task, but it is a valuable skill, particularly if you work in a field that requires extensive data analysis.

To create your own data parser, you'll need programming skills, knowledge of the data format your parser will work with, and an understanding of parsing techniques. Once it's built, you can extract the specific information you need from your data, whether for market research, data analysis, or any other purpose.

One advantage of creating your own parser is that you can customize it to meet your needs. You can tailor it to extract only the information you need, saving time and resources, and you can add new features or modify existing ones as your needs change.

How Does Data Parsing Work?

At its core, data parsing involves taking a large set of data and breaking it down into smaller, more manageable pieces that can then be analyzed and manipulated as needed. This is the job of a data parser: a software tool that converts raw data into a structured, readable format that other programs or applications can process more easily.

Many different types of data parsers are available, each designed to work with a particular data format. For example, some data parsers are designed to work with XML files, while others are designed to work with JSON or CSV files. Some parsers can also handle multiple formats.

Consider developing your own data parser if you need to work with a particular data format. You can do this in a programming language such as Python or Java, and many resources are available online to help you get started. A custom parser can be tailored to your specific needs and can handle any unique challenges or quirks in your data.

Once you have a data parser, the actual parsing process can begin. The first step is to feed the raw data into the parser. This can be done by importing a file or sending data directly to the parser through an API. The parser will then break the data into smaller pieces based on the rules and patterns it has been programmed to follow.

During the parsing process, the data parser may perform additional tasks, such as data validation or transformation. For example, it may check that the data is in the correct format and that no fields are missing. It may also convert values from one type to another, such as turning a date stored as a string into a date object.
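As a minimal Python sketch of validation and transformation, the function below checks a raw record for required fields and converts its date string into a date object. The field names (`id`, `name`, `signup_date`), the `%Y-%m-%d` date format, and the function name `validate_and_transform` are hypothetical choices made for illustration.

```python
from datetime import datetime

# Hypothetical required fields for this example.
REQUIRED_FIELDS = ("id", "name", "signup_date")


def validate_and_transform(record: dict) -> dict:
    """Check a raw record for missing fields, then convert its values to proper types."""
    # Validation: every required field must be present and non-empty.
    missing = [field for field in REQUIRED_FIELDS if not record.get(field)]
    if missing:
        raise ValueError(f"missing fields: {', '.join(missing)}")

    # Transformation: string -> int, trimmed string, and string -> date object.
    signup = datetime.strptime(record["signup_date"], "%Y-%m-%d").date()
    return {
        "id": int(record["id"]),
        "name": record["name"].strip(),
        "signup_date": signup,
    }


raw = {"id": "42", "name": " Ada Lovelace ", "signup_date": "2024-03-15"}
parsed = validate_and_transform(raw)
print(parsed["signup_date"].year)  # 2024
```

A malformed date such as `"15/03/2024"` makes `strptime` raise `ValueError`, so format errors and missing fields surface the same way to the caller.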

One effective tool for gathering data to parse is a web scraping API. Using such an API, businesses can automate data extraction from various websites, making the parsing process more efficient and scalable. A web scraping tool can complement APIs by offering a user-friendly solution for specific data extraction needs, especially for businesses dealing with diverse data sources.

Once parsing is complete, the parsed data can be output in various formats, depending on your needs: for example, a CSV file, a JSON object, or an XML document. The data can then be used for market research, data analysis, building new applications on top of web data, and other purposes.
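A short sketch of the output step using only Python's standard library. The `records` list is hypothetical sample data standing in for whatever an earlier parsing step produced, and `io.StringIO` stands in for a real output file.

```python
import csv
import io
import json

# Hypothetical records produced by an earlier parsing step.
records = [
    {"name": "Widget", "price": 9.99},
    {"name": "Gadget", "price": 24.5},
]

# Serialize to JSON text.
json_output = json.dumps(records, indent=2)

# Serialize to CSV text; in practice, pass a file opened with open(path, "w", newline="").
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
writer.writeheader()
writer.writerows(records)
csv_output = buffer.getvalue()

print(csv_output)
```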

In short, data parsing is a critical process that allows us to extract valuable insights and information from complex data sets. Using a data parser, we can break down large data sets into smaller, more manageable pieces of readable data, which can then be processed and analyzed as needed. Whether you buy a data parser or develop your own, this powerful tool can help you unlock the full potential of your data.

Types of Data Parsing Techniques

Data parsing extracts structured data from unstructured or semi-structured sources by breaking the data into smaller pieces and identifying the relevant information. Many parsing techniques are used across different applications; here we discuss some of the most common.

String Parsing

String parsing is the most basic type of data parsing technique. It involves breaking down a string of characters into smaller substrings to extract the relevant information. This technique is often used in simple text parsing applications, such as searching for specific keywords in a document or extracting information from a URL.
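To illustrate string parsing on a URL, here is a minimal sketch that pulls query parameters out using only string methods (`partition` and `split`). The helper name `parse_query_string` is hypothetical, and the sketch deliberately skips percent-decoding and repeated keys; in real code, `urllib.parse.parse_qs` from the standard library handles those cases.

```python
def parse_query_string(url: str) -> dict:
    """Hypothetical helper: split a URL's query string into a dict using string methods only."""
    # Everything after the first "?" is the query; then drop any "#fragment".
    _, _, query = url.partition("?")
    query, _, _ = query.partition("#")

    params = {}
    for pair in query.split("&"):
        if not pair:
            continue  # skip empty segments, e.g. from a trailing "&" or no query at all
        key, _, value = pair.partition("=")
        params[key] = value
    return params


print(parse_query_string("https://example.com/search?q=parsers&page=2#results"))
# {'q': 'parsers', 'page': '2'}
```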

Regular Expression Parsing

Regular expression parsing is a more advanced technique that uses regular expressions to extract information from unstructured or semi-structured data sources. A regular expression is a sequence of characters that defines a search pattern. Regular expressions can be used to find specific patterns of characters, such as phone numbers or email addresses, in a text document.
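The sketch below uses Python's `re` module to pull email addresses and phone numbers out of free text. Both patterns are simplified assumptions (the email pattern is far from a full address validator, and the phone pattern only covers North American-style numbers such as `555-123-4567`); the sample text is made up.

```python
import re

text = """Contact sales@example.com or call 555-123-4567.
Support: help.desk@example.org, (555) 987-6543."""

# Simplified email pattern: local part, "@", then a domain with at least one dot.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# Simplified phone pattern: optional parentheses around the area code, then NNN-NNNN.
PHONE_RE = re.compile(r"\(?\d{3}\)?[ -]?\d{3}-\d{4}")

emails = EMAIL_RE.findall(text)
phones = PHONE_RE.findall(text)
print(emails)  # ['sales@example.com', 'help.desk@example.org']
print(phones)  # ['555-123-4567', '(555) 987-6543']
```

Compiling the patterns once with `re.compile` keeps them reusable and readable when the same search runs over many documents.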

XML Parsing

XML parsing is a type of data parsing technique that is used to extract information from XML documents. XML is a markup language that is used to store and transport data between systems. XML parsing involves breaking down the XML document into its individual elements and attributes to extract the relevant information.
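A minimal sketch of XML parsing with Python's built-in `xml.etree.ElementTree`, reading both element text and attributes. The catalog document is hypothetical sample data.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample document.
xml_data = """<catalog>
  <book id="bk101">
    <title>XML Developer's Guide</title>
    <price currency="USD">44.95</price>
  </book>
  <book id="bk102">
    <title>Midnight Rain</title>
    <price currency="USD">5.95</price>
  </book>
</catalog>"""

root = ET.fromstring(xml_data)
books = []
for book in root.findall("book"):  # direct <book> children of <catalog>
    books.append({
        "id": book.get("id"),                           # attribute value
        "title": book.findtext("title"),                # text of a child element
        "price": float(book.findtext("price")),
        "currency": book.find("price").get("currency"), # attribute of a child element
    })

print(books[0]["title"])  # XML Developer's Guide
```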

JSON Parsing

JSON parsing is similar to XML parsing but is used to extract information from JSON documents. JSON is a lightweight data interchange format that is commonly used in web applications. JSON parsing involves breaking down the JSON document into its individual key-value pairs to extract the relevant information.
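For comparison, a minimal sketch of JSON parsing with Python's standard `json` module, showing how JSON types map to Python types. The payload is hypothetical.

```python
import json

# Hypothetical API response.
payload = """
{
  "user": {"id": 7, "name": "Grace"},
  "tags": ["admin", "editor"],
  "active": true,
  "manager": null
}
"""

data = json.loads(payload)      # JSON objects become dicts, arrays become lists

user_name = data["user"]["name"]  # nested key-value lookup
first_tag = data["tags"][0]       # arrays are indexed like lists
is_active = data["active"]        # JSON true -> Python True
manager = data.get("manager")     # JSON null -> Python None

print(user_name, first_tag, is_active, manager)
# Grace admin True None
```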

HTML Parsing

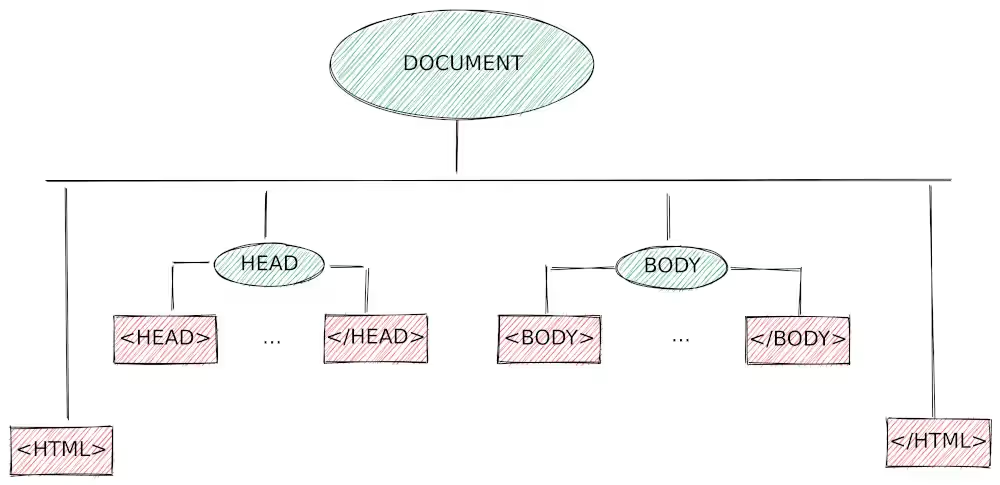

HTML parsing is a type of data parsing technique that is used to extract information from HTML documents. HTML is a markup language that is used to create web pages. HTML parsing involves breaking down the raw HTML document into its individual tags and attributes to extract the relevant information.
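As a sketch of HTML parsing without third-party packages, the class below subclasses the standard library's `html.parser.HTMLParser` to collect each link's `href` and text. The class name `LinkExtractor` and the sample markup are hypothetical; for messy real-world pages, tools such as Beautiful Soup or lxml are usually more forgiving.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Hypothetical example class: collect (href, text) for every <a> tag."""

    def __init__(self):
        super().__init__()
        self.links = []           # list of (href, text) tuples
        self._current_href = None
        self._text_parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            # attrs arrives as a list of (name, value) pairs
            self._current_href = dict(attrs).get("href")
            self._text_parts = []

    def handle_data(self, data):
        if self._current_href is not None:  # only keep text inside a link
            self._text_parts.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._current_href is not None:
            self.links.append((self._current_href, "".join(self._text_parts).strip()))
            self._current_href = None


html = '<p>Read the <a href="/docs">documentation</a> or <a href="https://example.com">visit us</a>.</p>'
parser = LinkExtractor()
parser.feed(html)
print(parser.links)
# [('/docs', 'documentation'), ('https://example.com', 'visit us')]
```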

Scripting Language Parsing

Scripting language parsing is a more advanced technique that uses scripting languages, such as Python or JavaScript, to extract information from unstructured or semi-structured data sources. It involves writing custom scripts that analyze the data and extract the relevant information.
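A small custom script is often enough for a one-off format. The sketch below assumes a hypothetical log format (`<timestamp> <LEVEL> <message>`), turns matching lines into dictionaries, skips lines that don't fit, and counts entries per level.

```python
import re
from collections import Counter

# Hypothetical log format: "<timestamp> <LEVEL> <message>"
LOG_LINE = re.compile(r"^(?P<timestamp>\S+) (?P<level>[A-Z]+) (?P<message>.*)$")

raw_log = """2024-05-01T10:00:00 INFO Service started
2024-05-01T10:00:05 WARN Disk usage at 85%
this line is malformed
2024-05-01T10:01:12 ERROR Connection refused"""


def parse_log(text: str) -> list:
    """Turn raw log text into a list of dicts, skipping lines that don't match."""
    entries = []
    for line in text.splitlines():
        match = LOG_LINE.match(line)
        if match:
            entries.append(match.groupdict())
    return entries


entries = parse_log(raw_log)
levels = Counter(entry["level"] for entry in entries)
print(levels)
```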

Data parsing is a critical process in data analysis and information retrieval. The techniques discussed here are just a few examples of the many types of data parsing techniques used in various applications.

Whether you are parsing data in a particular format or building your own parser or data parsing tool, it is important to understand the different data parsing techniques and their applications. With that understanding, you can convert data into a format that is more readable, usable, and meaningful for market research or other data-driven applications.

Best Practices for Data Parsing

Data parsing is essential for businesses, researchers, and developers who need to understand their data, draw insights, and make informed decisions. To ensure accurate and efficient parsing, consider the following best practices.

Determine the Data Format

The first step in data parsing is to determine the data format, which reveals the structure and organization of the data. Data can come in various forms, such as plain text, HTML, XML, JSON, CSV, and more. Knowing the format lets you choose the appropriate parsing tools and techniques.
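When the format isn't known in advance, a rough first guess can be automated. The function below is a hypothetical heuristic, not a standard tool: it tries strict JSON and XML parsers first, then falls back to a simple CSV check (every line has the same non-zero number of commas). Note its stated limitations: well-formed HTML also passes the XML check, and commas inside quoted CSV fields will confuse it.

```python
import json
import xml.etree.ElementTree as ET


def detect_format(text: str) -> str:
    """Hypothetical heuristic: guess 'json', 'xml', 'csv', or 'text'."""
    stripped = text.strip()

    # Strict parsers first: if the JSON parser accepts it, treat it as JSON.
    try:
        json.loads(stripped)
        return "json"
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        pass

    if stripped.startswith("<"):
        try:
            ET.fromstring(stripped)
            return "xml"
        except ET.ParseError:
            pass

    # CSV heuristic: at least two lines, each with the same non-zero comma count.
    lines = stripped.splitlines()
    commas = lines[0].count(",") if lines else 0
    if len(lines) >= 2 and commas > 0 and all(line.count(",") == commas for line in lines):
        return "csv"

    return "text"


for sample in ['{"a": 1}', "<root><item/></root>", "name,price\nWidget,9.99", "just some notes"]:
    print(detect_format(sample))  # json, xml, csv, text (one per line)
```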

Choose the Right Parsing Tool

After identifying the data format, select the appropriate data parsing tool that can handle the specific format. Several tools are available, including open-source and commercial data parsers. Evaluating the tools based on performance, accuracy, compatibility, and ease of use is essential before selecting the most suitable one.

Test the Parser

Testing the parser helps ensure that it extracts data accurately and efficiently. Run it against a variety of inputs, including malformed and edge-case data, to check for errors and inconsistencies. Testing also helps identify performance issues so they can be addressed.
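A sketch of what such tests might look like with Python's built-in `unittest`. The function under test, `parse_price`, is a hypothetical parser that turns strings like `"$1,234.50"` into floats; the cases cover normal input, formatting noise, and inputs that should be rejected.

```python
import unittest


def parse_price(text: str) -> float:
    """Hypothetical parser under test: turn '$1,234.50'-style strings into floats."""
    cleaned = text.strip().replace("$", "").replace(",", "")
    if not cleaned:
        raise ValueError("empty price")
    return float(cleaned)  # raises ValueError for non-numeric text


class ParsePriceTests(unittest.TestCase):
    def test_plain_number(self):
        self.assertEqual(parse_price("19.99"), 19.99)

    def test_currency_symbol_and_thousands_separator(self):
        self.assertEqual(parse_price("$1,234.50"), 1234.50)

    def test_surrounding_whitespace(self):
        self.assertEqual(parse_price("  $5 "), 5.0)

    def test_empty_input_is_rejected(self):
        with self.assertRaises(ValueError):
            parse_price("   ")

    def test_garbage_is_rejected(self):
        with self.assertRaises(ValueError):
            parse_price("N/A")
```

Saved in a file named, for example, `test_parse_price.py`, these tests can be discovered and run with `python -m unittest`.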

Handle Errors Gracefully

Data parsing can be prone to errors due to inconsistencies in the data, data corruption, or incorrect data formats. Handling these errors gracefully is vital to avoid crashing the parser or system. One approach is to use exception handling to detect errors and respond appropriately, such as by logging the errors, retrying the operation, or providing feedback to the user.
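Here is one way to apply that advice, sketched for a hypothetical input of one JSON object per line: malformed lines are caught with `try`/`except`, logged with their line number, and skipped, so a single bad record doesn't crash the whole run. The function name `parse_json_lines` is illustrative.

```python
import json
import logging

logging.basicConfig(level=logging.WARNING)
logger = logging.getLogger("parser")


def parse_json_lines(lines):
    """Hypothetical helper: parse one JSON object per line, logging and skipping bad lines."""
    records, failures = [], 0
    for line_number, line in enumerate(lines, start=1):
        try:
            records.append(json.loads(line))
        except json.JSONDecodeError as exc:
            failures += 1
            # exc.msg describes the problem; exc.pos gives the character offset
            logger.warning("line %d skipped: %s (position %d)", line_number, exc.msg, exc.pos)
    return records, failures


lines = ['{"id": 1}', '{"id": 2', '{"id": 3}']
records, failures = parse_json_lines(lines)
print(records, failures)  # [{'id': 1}, {'id': 3}] 1
```

Returning the failure count lets the caller decide whether too many skipped lines should abort the job instead.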

Optimize Performance

Parsing large volumes of data can be time-consuming and resource-intensive. Therefore, optimizing the parser's performance is essential to improve efficiency. This can be achieved by using caching mechanisms, multithreading, and reducing the number of I/O operations.
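A sketch of two of these ideas in Python, assuming a hypothetical CSV with `day` and `amount` columns: a generator streams rows one at a time instead of loading the whole file into memory, and `functools.lru_cache` memoizes a conversion that repeats often. Multithreading is not shown; in CPython, threads mainly help when parsing is I/O-bound rather than CPU-bound.

```python
import csv
import io
from datetime import datetime
from functools import lru_cache


@lru_cache(maxsize=4096)
def parse_day(text: str):
    """Cache date conversions, since the same date string often appears many times."""
    return datetime.strptime(text, "%Y-%m-%d").date()


def stream_rows(file_obj):
    """Yield parsed rows one at a time rather than building a full list in memory."""
    for row in csv.DictReader(file_obj):  # hypothetical columns: day, amount
        yield {"day": parse_day(row["day"]), "amount": float(row["amount"])}


# io.StringIO stands in for a large file opened with open(path, newline="").
data = io.StringIO("day,amount\n2024-01-01,10.5\n2024-01-01,4.5\n2024-01-02,3\n")
total = sum(row["amount"] for row in stream_rows(data))
print(total)                        # 18.0
print(parse_day.cache_info().hits)  # 1 (the second "2024-01-01" came from the cache)
```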

Maintain Flexibility

Data parsing requirements may change as new data formats, sources, or business needs emerge, so the parser should stay flexible enough to adapt. Modular design, separation of concerns, and configuration files that allow easy modification all help.
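One modular pattern, sketched here with hypothetical names (`PARSERS`, `register`, `parse`), is a registry that maps a format name to a parser function. Supporting a new format means registering one more function, and the format name could come from a configuration file rather than being hard-coded.

```python
import csv
import io
import json
from typing import Callable, Dict, List

# Hypothetical registry: format name -> parser function.
PARSERS: Dict[str, Callable[[str], List[dict]]] = {}


def register(fmt: str):
    """Decorator that adds a parser function to the registry under `fmt`."""
    def wrapper(func):
        PARSERS[fmt] = func
        return func
    return wrapper


@register("json")
def parse_json(text: str) -> List[dict]:
    return json.loads(text)


@register("csv")
def parse_csv(text: str) -> List[dict]:
    return list(csv.DictReader(io.StringIO(text)))


def parse(text: str, fmt: str) -> List[dict]:
    """Dispatch to the parser registered for `fmt`."""
    parser = PARSERS.get(fmt)
    if parser is None:
        raise ValueError(f"no parser registered for {fmt!r}")
    return parser(text)


print(parse('[{"name": "Widget"}]', "json"))  # [{'name': 'Widget'}]
print(parse("name\nWidget\n", "csv"))         # [{'name': 'Widget'}]
```

Because each parser is an independent function, one format's logic can change without touching the others.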

Document the Process

Documenting the parsing process is critical to ensure it can be reproduced, maintained, and improved over time. This includes documenting the data format, parser tool, testing results, error handling, performance optimizations, and any modifications made to the parser.

Common Data Parsing Challenges and How to Overcome Them

Data parsing can be a complex process, and several challenges can arise during the parsing process. This section will discuss some common data parsing challenges and provide solutions to overcome them.

Inconsistent data formats

One of the most common challenges in data parsing is inconsistent data formats. When data is received from different sources, it can be in various formats, making it challenging to parse. This can lead to parsing errors or missing data.

Solution: Use a flexible data parser that can handle different data formats. Data parsers can be programmed to recognize various input formats, such as HTML, and convert them into a consistent format. It is also essential to analyze the data thoroughly and understand its structure before parsing.
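A common case of inconsistency is dates written differently by different sources. The sketch below normalizes them to ISO 8601 by trying a list of known formats in order. The format list and the function name `normalize_date` are hypothetical; note that the list assumes day-first for slash-separated dates, so an ambiguous value like `03/04/2024` would be read as 3 April.

```python
from datetime import datetime

# Hypothetical formats seen across sources; order matters for ambiguous inputs.
KNOWN_DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%B %d, %Y", "%Y%m%d")


def normalize_date(text: str) -> str:
    """Return the date as ISO 8601 (YYYY-MM-DD), trying each known format in turn."""
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # not this format; try the next one
    raise ValueError(f"unrecognized date format: {text!r}")


for raw in ["2024-03-15", "15/03/2024", "March 15, 2024", "20240315"]:
    print(normalize_date(raw))  # 2024-03-15 each time
```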

Missing or incomplete data

Another challenge in data parsing is missing or incomplete data. Some records may be absent entirely, or some fields may contain null values, leading to incorrect interpretation.

Solution: Use a data parser that can handle missing or incomplete data. Data parsers can be programmed to recognize null values and fill in missing or unreadable data with default values or placeholders. It is also essential to validate data and verify that the parsed data is complete and accurate.
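A minimal sketch of that approach: required fields must be present, while optional fields that are missing, null, or empty get default values. The field names and defaults (`order_id`, `country`, `quantity`) are hypothetical, as is the helper name `fill_missing`.

```python
# Hypothetical schema: one required field and defaults for optional ones.
REQUIRED = ("order_id",)
DEFAULTS = {"country": "unknown", "quantity": 0}


def fill_missing(record: dict) -> dict:
    """Reject records missing required fields; fill optional gaps with defaults."""
    for field in REQUIRED:
        if record.get(field) in (None, ""):
            raise ValueError(f"required field {field!r} is missing")

    filled = dict(record)  # copy so the caller's record is not modified
    for field, default in DEFAULTS.items():
        if filled.get(field) in (None, ""):  # treat absent, null, and empty string as missing
            filled[field] = default
    return filled


print(fill_missing({"order_id": "A1", "country": None}))
# {'order_id': 'A1', 'country': 'unknown', 'quantity': 0}
```

Choosing between a default and outright rejection depends on whether a placeholder could mislead later analysis; key identifiers usually should not be guessed.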

Parsing performance

Data parsing can be time-consuming, especially with large datasets. Performance is particularly challenging for real-time data streams, where data must be parsed quickly as it arrives.

Solution: Use a fast data parser to handle large datasets and real-time data streams. Optimizing the parsing process and avoiding unnecessary steps that can slow down the parsing performance is also essential.

Parsing errors

Data parsing errors can occur for various reasons, including syntax errors, data format errors, and parsing logic errors.

Solution: Use a data parser that provides error-handling capabilities. Data parsers can be programmed to handle syntax errors and produce error messages that help debug the parsing process. It is also essential to validate the data and ensure that the parsed output matches the expected format.

Conclusion

In conclusion, data parsing is a critical process used in various industries to extract valuable insights from large data sets. By using data parsing techniques, companies can convert raw data into a structured format that is easier to analyze and use for decision-making. However, data parsing has several challenges, such as dealing with different data formats and handling errors. Companies can overcome these challenges by adopting best practices such as thorough testing, maintaining good documentation, and utilizing practical data parsing tools. By doing so, they can unlock the full potential of their data and make informed business decisions.

Unlock the power of glocalization with our Translation Management System.
